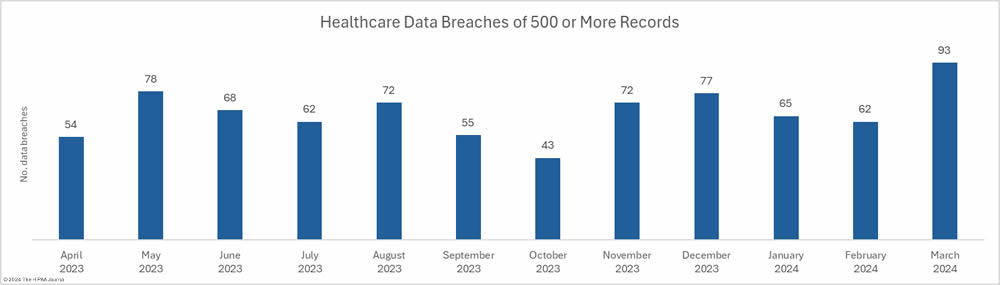

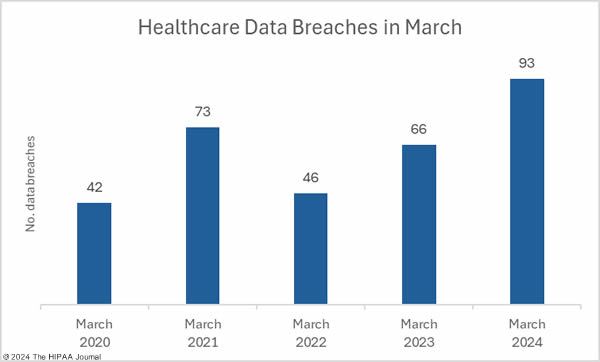

March was a particularly bad month for healthcare data breaches, with 93 breaches of 500 or more records reported to the Department of Health and Human Services (HHS) Office for Civil Rights (OCR), a 50% increase from February and a 41% year-over-year increase from March 2023. The last time more than 90 data breaches were reported in a single month was September 2020.

The exceptionally high breach count was the result of a single cyberattack on Ernest Health, an operator of rehabilitation and long-term acute care hospitals. When a breach at a health system affects multiple hospitals, it is usually reported as a single breach; in this case, the breach was reported separately for each of the 31 affected hospitals. Had it been reported to OCR as a single breach, the month’s total would have been 63 breaches, below the average of 66.75 breaches a month over the past 12 months.
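The adjustment is simple arithmetic: remove the 31 per-hospital reports and count the Ernest Health incident once. A minimal Python sketch, assuming the Ernest Health attack was the only incident split across multiple reports in March (the helper name `consolidated_total` is hypothetical):

```python
# Figures taken from this report (March 2024).
reported_breaches = 93        # breach reports submitted to OCR
ernest_health_reports = 31    # one report per affected Ernest Health hospital
twelve_month_average = 66.75  # average breaches per month over the past 12 months


def consolidated_total(total: int, split_reports: int) -> int:
    """Hypothetical helper: count an incident reported once per hospital as one breach."""
    return total - split_reports + 1


march_total = consolidated_total(reported_breaches, ernest_health_reports)
print(march_total)                         # 63
print(march_total < twelve_month_average)  # True
```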

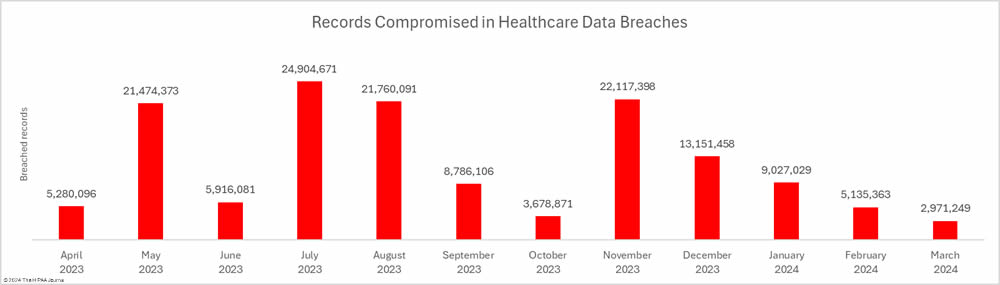

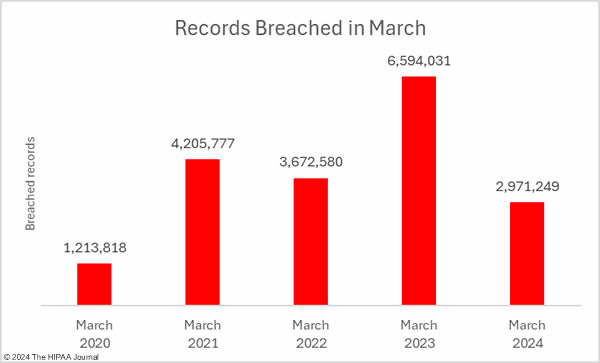

While the breach count was high, the number of individuals affected by healthcare data breaches fell for the fourth consecutive month, to the lowest monthly total since January 2023. Across the 93 reported data breaches, the protected health information (PHI) of 2,971,249 individuals was exposed or impermissibly disclosed, the lowest total for March since 2020.

Biggest Healthcare Data Breaches in March 2024

Eighteen data breaches reported in March involved the protected health information of 10,000 or more individuals, and all of them were hacking incidents. The largest breach of the month was reported by the Pennsylvania dental care provider Risa’s Dental and Braces. Although the breach was reported in March, it occurred eight months earlier, in July 2023. A similarly sized breach was reported by Emergency Medical Services Authority, Oklahoma’s largest emergency medical care provider. Hackers gained access to its network in February and stole files containing names, addresses, dates of birth, and Social Security numbers.

Philips Respironics, a provider of respiratory care products, initially reported a hacking-related breach to OCR involving the PHI of 457,152 individuals. Hackers gained access to the network of Queens, NY-based billing service provider M&D Capital Premier Billing in July 2023 and stole files containing the PHI of 284,326 individuals. Yakima Valley Radiology in Washington reported an August 2023 hacking incident that involved the PHI of 235,249 individuals, and the California debt collection firm Designed Receivable Solutions experienced a breach of the PHI of 129,584 individuals. The details of the Designed Receivable Solutions breach are not known, as there has been no public announcement other than the breach report to OCR.

| Name of Covered Entity | State | Covered Entity Type | Individuals Affected | Breach Cause |
|---|---|---|---|---|
| Risas Dental & Braces | PA | Healthcare Provider | 618,189 | Hacking Incident |
| Emergency Medical Services Authority | OK | Healthcare Provider | 611,743 | Hacking Incident |
| Philips Respironics | PA | Business Associate | 457,152 | Exploited software vulnerability (MOVEit Transfer) |
| M&D Capital Premier Billing LLC | NY | Business Associate | 284,326 | Hacking Incident |
| Yakima Valley Radiology, PC | WA | Healthcare Provider | 235,249 | Hacked email account |
| Designed Receivable Solutions, Inc. | CA | Business Associate | 129,584 | Hacking Incident |
| University of Wisconsin Hospitals and Clinics Authority | WI | Healthcare Provider | 85,902 | Compromised email account |
| Aveanna Healthcare | GA | Healthcare Provider | 65,482 | Compromised email account |
| Ezras Choilim Health Center, Inc. | NY | Healthcare Provider | 59,861 | Hacking Incident (data theft confirmed) |
| Valley Oaks Health | IN | Healthcare Provider | 50,034 | Hacking Incident |
| Family Health Center | MI | Healthcare Provider | 33,240 | Ransomware attack |
| CCM Health | MN | Healthcare Provider | 28,760 | Hacking Incident |
| Weirton Medical Center | WV | Healthcare Provider | 26,793 | Hacking Incident |
| Pembina County Memorial Hospital | ND | Healthcare Provider | 23,811 | Hacking Incident (data theft confirmed) |
| R1 RCM Inc. | IL | Business Associate | 16,121 | Hacking Incident (data theft confirmed) |
| Ethos, also known as Southwest Boston Senior Services | MA | Business Associate | 14,503 | Hacking Incident |
| Pomona Valley Hospital Medical Center | CA | Healthcare Provider | 13,345 | Ransomware attack on subcontractor of a vendor |
| Rancho Family Medical Group, Inc. | CA | Healthcare Provider | 10,480 | Cyberattack on business associate (KMJ Health Solutions) |

Data Breach Causes and Location of Compromised PHI

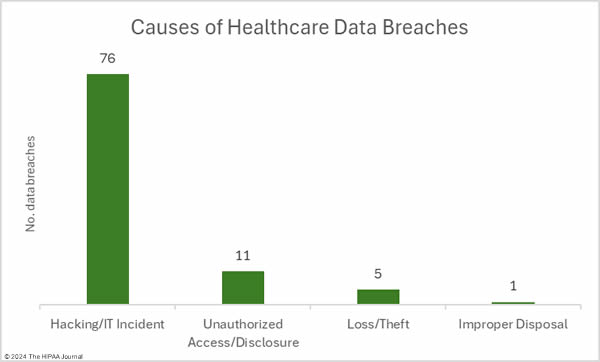

As has been the case for many months, hacking incidents dominated the breach reports. Of the month’s breaches, 76 were classed as hacking/IT incidents; together they involved the records of 2,918,585 individuals, 98.2% of all records compromised in March. The average breach size was 38,402 records and the median was 3,144 records. The nature of these incidents is becoming harder to determine because breach notifications typically disclose little about them, such as whether ransomware or other malware was used. This lack of information makes it hard for affected individuals to assess the level of risk they face. Many breach notices described the incidents as “cyberattacks that caused network disruption,” which suggests they were ransomware attacks.
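The share and average quoted for hacking incidents follow directly from the totals in this report. A minimal Python sketch using only those published figures; the median cannot be reproduced this way because it needs the individual breach sizes, which are not listed here:

```python
# Published March 2024 totals.
hacking_breaches = 76
hacking_records = 2_918_585
all_records = 2_971_249  # records affected across all 93 breaches

share = hacking_records / all_records       # fraction of all records from hacking
average = hacking_records / hacking_breaches  # mean records per hacking breach

print(f"{share:.1%}")   # 98.2%
print(round(average))   # 38402
```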

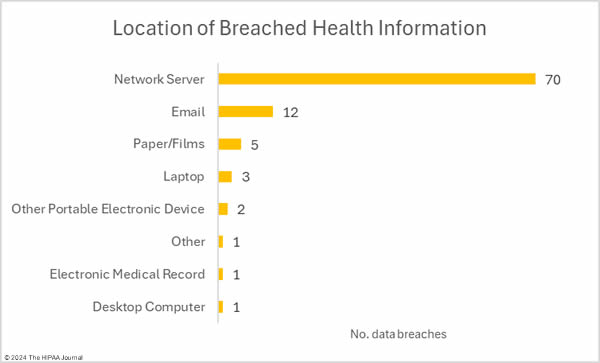

There were 11 unauthorized access/disclosure incidents, involving a total of 36,533 records (average: 3,321 records; median: 1,956 records). There were also 4 theft incidents and 1 loss incident, involving a total of 15,631 records (average: 3,126 records; median: 3,716 records), and one improper disposal incident involving an estimated 500 records. The most common location of breached PHI was network servers, which is to be expected given the number of hacking incidents, followed by compromised email accounts.
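The per-category figures can be cross-checked: each average is records divided by breaches, and the four cause categories together should account for all 93 breaches and 2,971,249 records. A small Python sketch using only the numbers in this report (theft and loss are grouped into one entry, as in the paragraph above, and the improper disposal count is OCR's estimate):

```python
# (breaches, records) per breach cause, March 2024.
categories = {
    "hacking/IT incident": (76, 2_918_585),
    "unauthorized access/disclosure": (11, 36_533),
    "theft or loss": (5, 15_631),
    "improper disposal": (1, 500),  # estimated record count
}

# Mean breach size per category, formatted with thousands separators.
for cause, (breaches, records) in categories.items():
    print(f"{cause}: average {records / breaches:,.0f} records")

# The categories should reconcile with the monthly totals.
total_breaches = sum(b for b, _ in categories.values())
total_records = sum(r for _, r in categories.values())
print(total_breaches, total_records)  # 93 2971249
```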

Where Did the Data Breaches Occur?

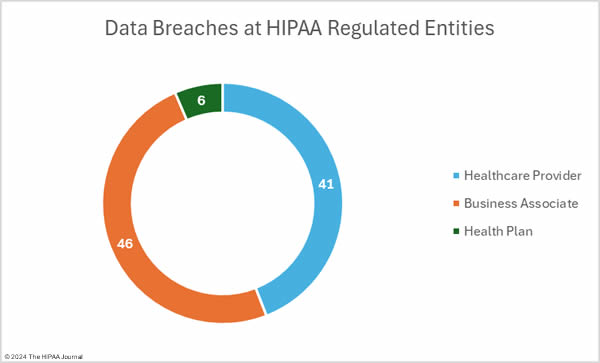

The OCR data breach portal shows there were 77 data breaches at healthcare providers (2,030,568 records), 10 at business associates (920,522 records), and 6 at health plans (20,159 records). As OCR recently confirmed in its Q&A for healthcare providers affected by the Change Healthcare ransomware attack, the covered entity is responsible for reporting breaches of protected health information that occur at a business associate, although the responsibility for issuing notifications can be delegated to the business associate. In some cases, a business associate reports a breach on behalf of some of its affected covered entity clients, while other clients choose to issue notifications themselves. As a result, the true number of data breaches occurring at business associates is often not clear from the breach portal. The HIPAA Journal has determined where each breach occurred, and the pie charts in this section show where the breaches occurred rather than which entity reported them.

Geographical Distribution of Healthcare Data Breaches

In March, data breaches were reported by HIPAA-regulated entities in 33 U.S. states. Texas was the worst affected state with 16 breaches, although 8 of those were reported by Ernest Health hospitals whose data was compromised in the same incident. California had 10 breaches, including 3 at Ernest Health hospitals, and New York was also badly affected, with 7.

| State | Breaches (per state) |
|---|---|
| Texas | 16 |
| California | 10 |
| New York | 7 |
| Pennsylvania | 6 |
| Indiana | 5 |
| Colorado & Florida | 4 |
| Illinois, Ohio & South Carolina | 3 |
| Arizona, Idaho, Massachusetts, Michigan, Minnesota, New Mexico, North Carolina, Oklahoma & Utah | 2 |
| Alabama, Georgia, Kansas, Kentucky, Nevada, New Jersey, North Dakota, Oregon, Tennessee, Virginia, Washington, West Virginia, Wisconsin & Wyoming | 1 |
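In the state table, a row listing several states gives the number of breaches in each of those states, not their combined total. Expanding the grouped rows confirms that the table covers 33 states and all 93 breaches; a short Python sketch using the table's own groupings:

```python
# Breach count -> states with that many breaches, as grouped in the table.
breaches_by_group = {
    16: ["Texas"],
    10: ["California"],
    7: ["New York"],
    6: ["Pennsylvania"],
    5: ["Indiana"],
    4: ["Colorado", "Florida"],
    3: ["Illinois", "Ohio", "South Carolina"],
    2: ["Arizona", "Idaho", "Massachusetts", "Michigan", "Minnesota",
        "New Mexico", "North Carolina", "Oklahoma", "Utah"],
    1: ["Alabama", "Georgia", "Kansas", "Kentucky", "Nevada", "New Jersey",
        "North Dakota", "Oregon", "Tennessee", "Virginia", "Washington",
        "West Virginia", "Wisconsin", "Wyoming"],
}

# Each state in a group contributes that group's breach count.
state_count = sum(len(states) for states in breaches_by_group.values())
breach_count = sum(n * len(states) for n, states in breaches_by_group.items())
print(state_count, breach_count)  # 33 93
```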

HIPAA Enforcement Activity in March 2024

OCR announced one settlement with a HIPAA-regulated entity in March to resolve alleged violations of the HIPAA Rules. The Oklahoma-based nursing care company Phoenix Healthcare was determined to have failed to provide a woman with a copy of her mother’s records, even though she was her mother’s personal representative. It took 323 days for the records to be provided, which OCR determined was a clear violation of the HIPAA Right of Access, and OCR proposed a financial penalty of $250,000.

Phoenix Healthcare requested a hearing before an Administrative Law Judge (ALJ), who upheld the violations but reduced the penalty to $75,000. Phoenix Healthcare appealed, and the Departmental Appeals Board affirmed the ALJ’s decision. However, OCR offered Phoenix Healthcare the opportunity to settle the alleged violations for $35,000, provided that it agreed not to challenge the Departmental Appeals Board’s decision.

The post March 2024 Healthcare Data Breach Report appeared first on HIPAA Journal.